TMTB Weekly: Gemini 3 and The Shifting AI Landscape

Happy Sunday. Another wild and fun week in Tech land…this is what it’s felt like the past few months being a Tech Investor vs. non-Tech:

Last week, we wrote how we were undergoing a new paradigm shift in the AI trade after Sam’s Splurge, with increasing questions and scrutiny versus the first 3 years of the trade, when most AI infra stocks acted as a monolithic block. If Sam Altman’s spending binge opened a Pandora’s box of existential AI questions, the Gemini 3 release this week delivered an even bigger shock to the equation, further reinforcing our view that the AI trade has shifted into a fundamentally different regime.

Describing the market environment last week, we said: “The market is currently doing what it always does after a narrative/paradigm shock: digest, recalibrate, reassign risk premia.” The market did more of that this week, beginning to price a world where Google is the dominant LLM. Over the last few days, we have found ourselves asking a lot more questions than we currently have answers to. Many of the investors we talk to are in the same boat. Among those:

How sticky is ChatGPT and how strong is its first-mover advantage? How will GOOGL’s increasing advantage in multi-modal AI shift the competitive landscape of chatbot LLMs? Does OpenAI pivot? What does that mean for the AI infra space?

ChatGPT has proven exceptionally sticky, largely due to its “first mover” advantage. Does the Gemini 3 release change this? We’re about to find out.

I think for the vast majority of consumers who use chat LLMs, there is unlikely to be any big difference between Gemini 3 and ChatGPT 5.1, so those who have ingrained habits, saved chat histories, and developed “muscle memory” for ChatGPT’s interface will likely stick with it.

While no big shift in users would be a good thing for ChatGPT, it also cements an important point: Chat LLM functionality is plateauing.

For power users, it might be a little different. Personally, I find G3 Pro faster and crisper than my previous go-to, ChatGPT 5 Heavy Thinking. While I still use ChatGPT Pro for more complex tasks, my guess is I’ll likely shift most of those workloads to Gemini Deep Think when it comes out.

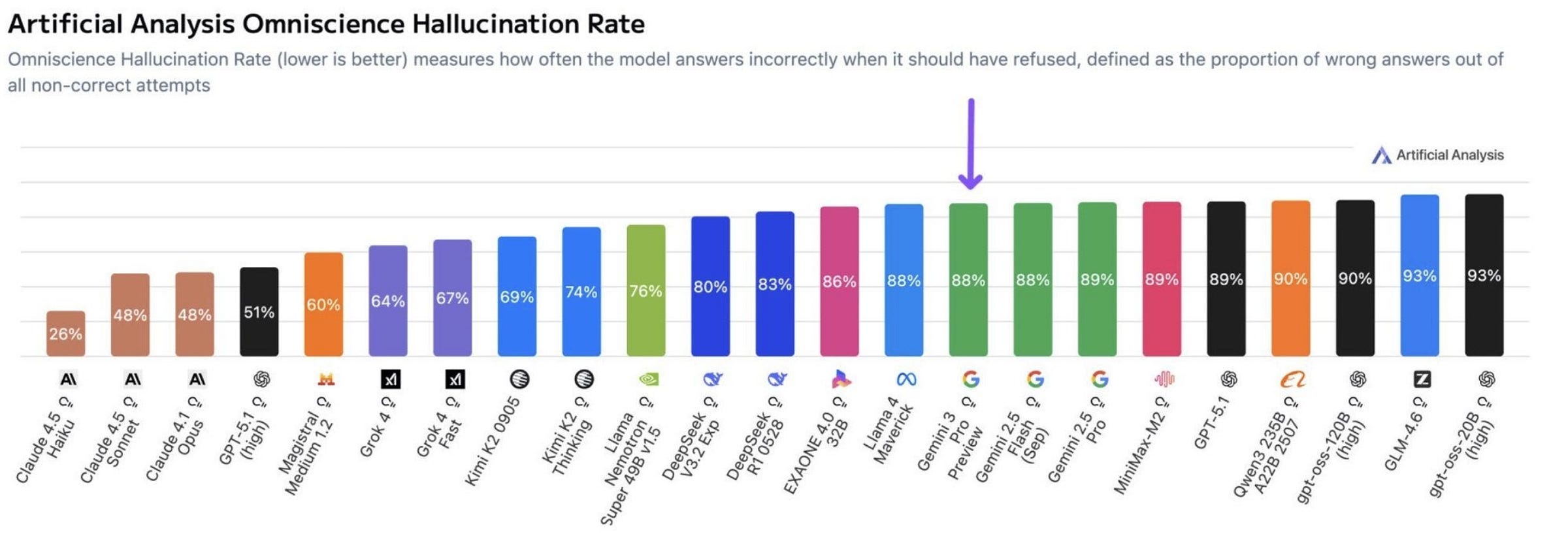

While Gemini 3’s benchmarks are stunning overall and lead the pack, and it is a very efficient model for back-end tasks, it isn’t perfect either: hallucination rates are much higher than ChatGPT 5.1’s.

Judging by reviews on X and Reddit, many users still prefer Codex and Sonnet 4.5 to Gemini 3 Pro, which still has low rate limits.

The Gemini 3 launch has lacked the virality factor so far, but it’s possible the release of Nanobanana Pro (already in the wild) and its next-gen video model Veo4 could change that. We have seen with ChatGPT’s Studio Ghibli image model release, Nanobanana’s initial release, and the Sora 2 release that image and video are much more likely to drive virality than chat LLM upgrades. These viral hooks are more likely to entice users to break their habits and try a competitor.

This also brings up another important issue that ties into plateauing chatbot functionality: model improvements will likely show up increasingly in multi-modal areas like image-gen and video creation, two areas in which having an advantage in token cost will be even more key. GOOGL just proved they are the leader here, and that lead is likely to grow, especially in video, where GOOGL has the whole YouTube corpus of data to train on.

Does OpenAI continue to pursue multi-modal and press the pedal on spend? Multi-modal is a game of marginal costs while reasoning (enterprise) is a game of marginal value. If OpenAI continues to fight Google on multimodal consumer features, they are entering a war of attrition they are mathematically destined to lose.

One could argue deciding to shift away from multi-modal is the best thing OpenAI can do: it decouples them from the “bandwidth wars” where Google’s infrastructure advantage is insurmountable and allows them to reallocate precious compute toward reasoning, helping position them as a premium intelligence layer for Enterprise rather than a commoditized content factory for consumers, something closer to what Anthropic is doing. This all depends on image/video functionality not shifting users to Gemini, which would trigger the bear case for OpenAI: a declining user base that ultimately hurts their ability to monetize the chatbot through ads.

And we haven’t even touched on the potential emerging advantage of Google’s “all-in-one” ecosystem, not only for multi-modal but also for Gemini becoming an ambient layer woven into the products many of us already use: Search, Gmail, Workspace, Android (and likely iPhone in the future). The competitive landscape is increasingly shifting from “who has the smartest chatbot” to “who has the most integrated workflow.” Well, Google has both right now.

This leaves OpenAI in a bit of “no-man’s land.” They can either bleed cash fighting a multimodal bandwidth war they can’t win, or retreat to the Enterprise niche (the Anthropic strategy). But even that retreat is dangerous, as Google leverages its “All-in-One” ecosystem to weave Gemini into the daily workflows of billions of users via Android and Workspace. In addition, Gemini 3 does very well with back-end enterprise tasks, and some of our checks already show some share shift there.

Lastly, while bulls will say Gemini 3 showed scaling laws still hold, bears will say incremental functionality to the average user is minimal. If OpenAI decides that “good enough” is sufficient for 90% of its users and they decide to cede multi-modal, do they slow down purchases of next-gen training clusters? Seems unlikely Sam will cede multi-modal, but economic realities are more stark now than they were a couple months ago. We know OpenAI has a deep talent pool, but they also lost a lot of multi-modal talent to META’s MSL.

Is it possible OpenAI pivots R&D to efficiency and focuses on making models smaller and faster rather than just smarter? We’ve seen what the Chinese have been able to do with much less compute than OpenAI is about to have. Will a pivot to “Who can run GPT-5 level intelligence locally on a phone?” rather than “Who has the smartest model in the cloud?” be the battleground OpenAI prefers to fight in?

The answer to these questions will have profound implications across the AI infrastructure ecosystem. The Gemini 3 release has accelerated how soon OpenAI and the industry need to confront these questions, among many others which were opened with Sam’s Splurge.

Taking all this into consideration, the post-NVDA EPS sell-off across the AI infra space seems more on-point than many initially posited, as the Gemini 3 release has forced investors to grapple with BIG questions with BIGGER implications.

And maybe the biggest question of all: what does this mean for NVDA’s margins? There were already concerns of peak margins given rising input cost inflation (particularly in memory) and because the Rubin ramp will initially be margin dilutive, and GOOGL’s vertical cost advantage adds an even bigger wrinkle.

Right now, the vast majority of the profit in the AI stack is being captured by one player: Nvidia. Others like OpenAI and Anthropic are operating at near-zero or negative margins to fund Nvidia’s 75% gross margins. This didn’t seem to be a problem for investors until Sam’s Splurge and Gemini 3 brought the questions to the forefront. To keep the ecosystem healthy and thriving, and to keep the “always moaaar compute” paradigm alive, will NVDA price next-gen GPUs lower than they otherwise would have? I understand the TCO math for GPUs: at the current token cost, it’s incredibly profitable for whoever buys the racks. I’m also still generally bullish on compute. My only point is that Gemini’s release raises questions that could shift the industry dynamics in unexpected ways, which leaves less room to argue for a 30x multiple, as we had previously done. GOOGL is already getting more aggressive at selling TPUs externally, and (along with AMD’s more competitive ‘26 lineup) we also think G3’s release and GOOGL’s demonstrated TPU cost/performance advantage will encourage companies to pursue their ASIC strategies more aggressively, all of which adds more competition for NVDA.

Still, while this week has muddied the narrative significantly — and we love clean narratives here at TMTB — there’s a price for everything. At the current price, NVDA is trading at 20x our 2026 EPS and despite unanswered questions, that seems like a pretty good price to pay.

Recent weeks have forced investors to confront the unforgiving speed of the AI shift. It feels like that scene in Interstellar where time has become dilated: one hour in Tech investing is 7 years back in the S&P 500. In this environment, having the mental agility to aggressively update priors and remain radically open-minded to the unexpected remains a critical edge. Should be a fun 2026.
