TMTB Weekly: From ASS to ACCel

You are reading a TMTB Weekly, a part of TMTB Pro. For a trial or more info on TMTB Pro, please reach out to sales@tmtbreakout.com for more info.

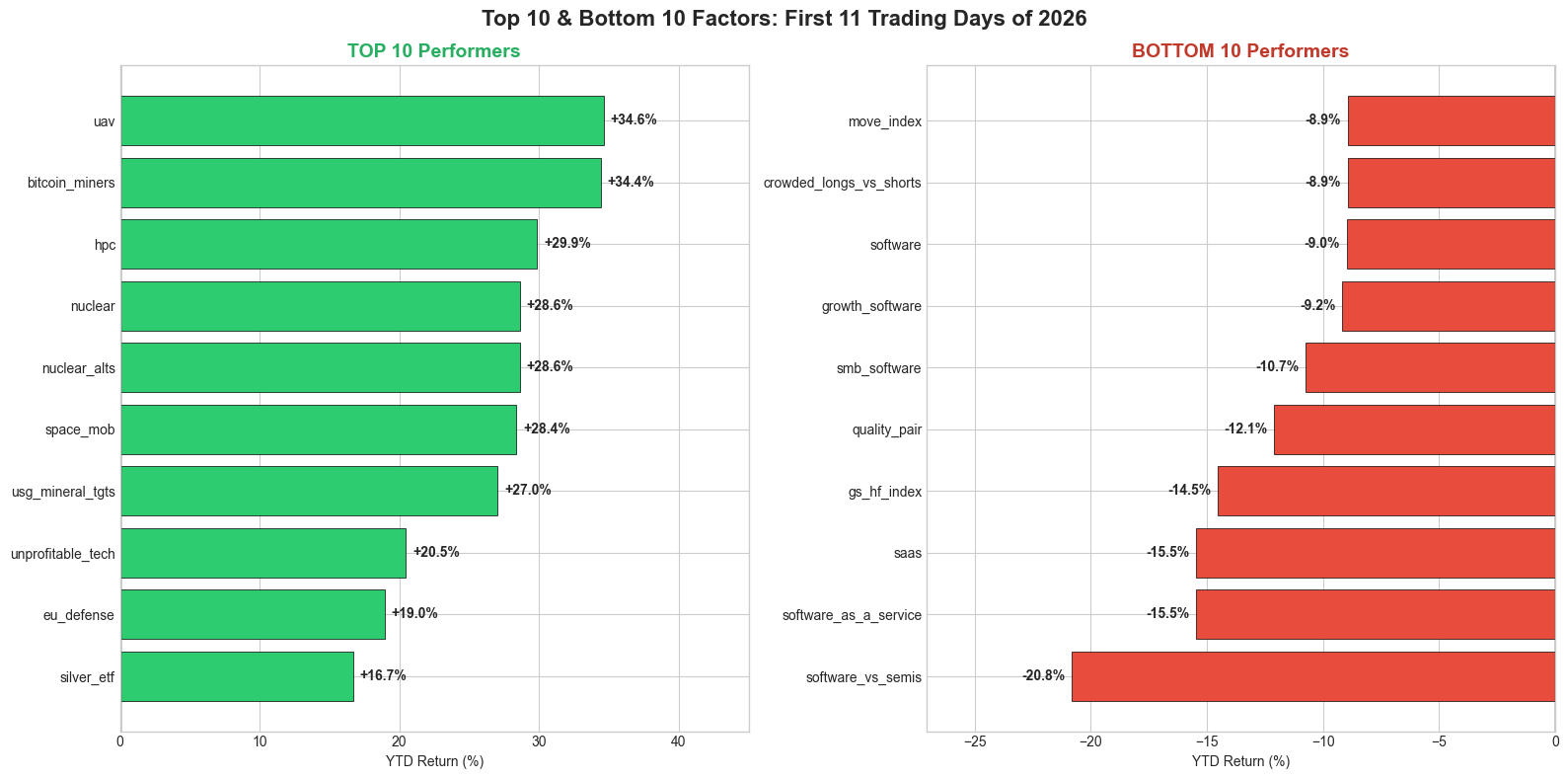

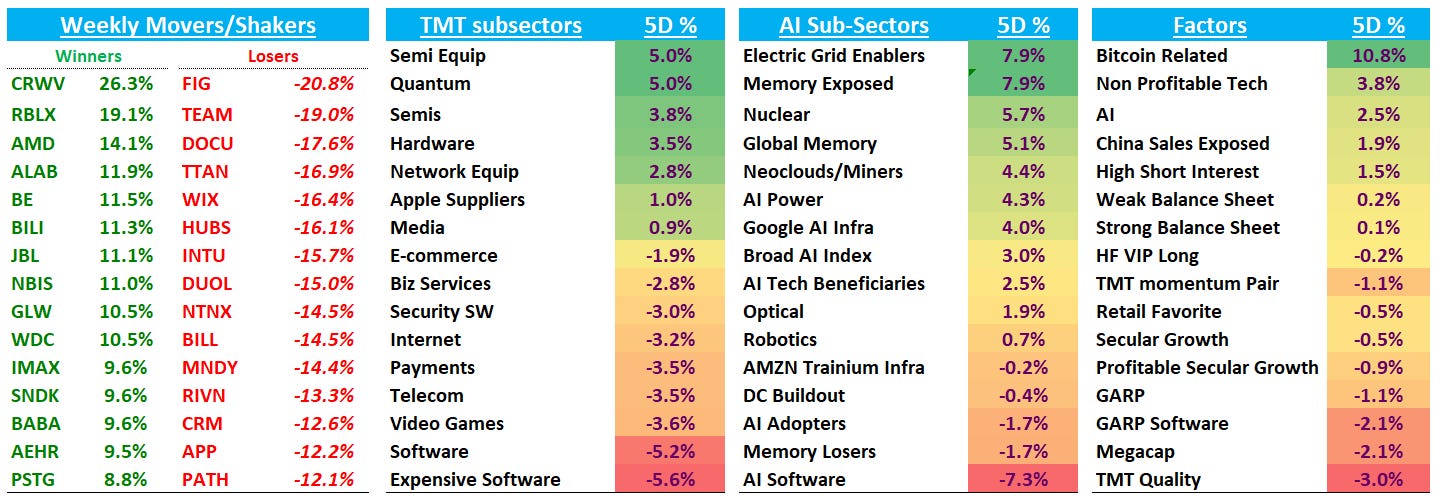

Happy Monday. Quite a start to the first two weeks of the year. The two big defining trades in Tech so far this year have been L IWMs vs S QQQs and L Compute/Memory vs. S Software. Outside of those it’s been a tricky environment as narratives outside of memory/storage feel unclear and unsteady. The GS HF VIP vs. most shorted index is down 14% YTD, showing there is pain out there in the HF community despite extreme moves to the upside in some names (SNDK +75% / BE +70% YTD for example):

Just check out last week: plenty of semis were up 10%+, while it was easier to find a software stock down 10%+ than one that was green on the week:

The price action in semis and software these past two weeks has been driven by improving AI sentiment, which in turn stems largely from the investor community's slowly-then-quickly realization of what Claude Code is and what it could imply. Here’s what we wrote on the first day back from the holidays, which laid out the shifting environment:

The AI Vibes are back in full force — kicked off with the OpenAI fundraising news right before the holidays marking the lows and accelerated with a lot of continued positive news flow over the break, the most important in our view being how good Claude Code/Opus 4.5 is. Among the many major releases we’ve seen over the last three years around AI that have wow’d us, this one feels like a top-three moment in terms of real, tangible step-function improvement rather than incremental progress. We find it hard to disagree that it’s “coding AGI.” While the discussion was loud on X, given the holiday break, talking to some other investors we got the sense it went relatively under the radar. But to us, it played a not so small part in the moves on Friday with the semi vs. software spread blowing out.

News of OpenAI’s fundraising, coupled with the release of Claude Code, has helped move tech sentiment past the dominant 'After Sam’s Splurge' (ASS) narrative. Regarding the capital raise, we continue to reference a key post from user WWW in the TMTB chat, posted the very day news of OpenAI's ambitions broke:

Some of you are covering shorts tomorrow. Some of you are still wondering why your AI longs are underperforming despite crushing numbers. To speed up the grieving process on both ends - this is a CONFIDENCE GAME and it has always been. Full stop. There was never any doubt about 2026 AI spending and most of the market doesn’t have a horizon past Q1 2026. This is TMTB’s “ASS” in a nutshell. The moment equity investors start citing CDS pricing means it is a confidence game.

If you aren’t flipping long AI on OAI raising $50b let alone $100b, then you haven’t come to terms with it yet. This was never about AI, it was about funding OAI. If OAI can fund the next 3 years, then “funding secured” and the party continues through 2027.

While we think some elements of ASS aren’t going away (more scrutiny on idiosyncratic stories vs. a rising tide lifting all boats, focus on the grid, questions around AI’s impact on unemployment, memory names being favored), we have shifted into a new overarching paradigm for the AI trade: the “After Claude Code” era (“ACCel”). While we think the near-term hype cycle surrounding Claude Code is likely 80% through, we think agentic coding “AGI” has significant implications that will work their way into the market throughout this year and beyond.

Let’s start from the top…

For years, "AI for Code" meant sophisticated autocomplete: tools like GitHub Copilot would suggest the next few lines of code based on the context of the current file. This was a productivity booster, but it required a pilot (i.e., the developer) to drive every action, review every suggestion, and integrate the code into the larger system. Then for a few years after ChatGPT, we entered the era of “Gen AI” coding, where the interface shifted from the editor to the chat window. Developers could now treat LLMs as conversational programmers. However, this created a fragmented workflow on top of lingering capability questions: the human was still the bridge, constantly copying and pasting context into the chat and manually moving the solution back into the codebase.

Then in late 2025 came the release of Claude Code, which marked the transition from "Gen AI" coding to "Agentic AI" coding. Unlike a chatbot or an autocomplete plugin, Claude Code operates directly in the user’s terminal in a simple way that has demystified operating in that environment for many (including us). It possesses "agency"—the capacity to act autonomously, select actions based on internal objectives, and execute complex workflows involving file manipulation, testing, and deployment. In other words: an "agent" receives a goal and then plans the execution. It explores the codebase, identifies the necessary files, writes the code, runs the tests, reads the error logs if the tests fail, creates a fix, and tries again. This "self-healing" loop, its ability to operate without human intervention, is the defining characteristic of the stack in the era of ACCel.
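To make that loop concrete, here is a minimal Python sketch of the plan, write, test, fix cycle described above. It is an illustration under our own assumptions, not Anthropic's implementation: `run_agent`, `CheckResult`, `toy_write_code`, and `toy_run_tests` are hypothetical names, and the "model" is a stub that only gets the answer right after it has seen one error log.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CheckResult:
    passed: bool
    log: str = ""


@dataclass
class AgentRun:
    attempts: int = 0
    history: List[str] = field(default_factory=list)
    succeeded: bool = False


def run_agent(
    goal: str,
    write_code: Callable[[str, List[str]], str],
    run_tests: Callable[[str], CheckResult],
    max_attempts: int = 5,
) -> AgentRun:
    """Write code, run the tests, read the errors, and retry until they pass."""
    run = AgentRun()
    while run.attempts < max_attempts:
        run.attempts += 1
        # The (hypothetical) model sees the goal plus every earlier error log.
        candidate = write_code(goal, run.history)
        result = run_tests(candidate)
        if result.passed:
            run.succeeded = True
            break
        # Feed the failure back in so the next attempt can try a fix.
        run.history.append(result.log)
    return run


# Toy stand-ins: the "model" only gets it right after seeing one error log.
def toy_write_code(goal: str, history: List[str]) -> str:
    return "return a + b" if history else "return a - b"


def toy_run_tests(candidate: str) -> CheckResult:
    if candidate == "return a + b":
        return CheckResult(passed=True)
    return CheckResult(passed=False, log="AssertionError: add(2, 2) != 4")


demo = run_agent("implement add(a, b)", toy_write_code, toy_run_tests)
print(demo.attempts, demo.succeeded)
```

The point of the sketch is the shape of the loop: no human sits between the failed test and the next attempt, and the error history grows with every retry.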

With Claude Code came several key novel functions (Anthropic keeps iterating and shipping product at an amazing pace with Claude Code, so these are just a few among many that continue to be released):

Deep Context & Memory: The ability to retain "institutional memory" across a project. Agents now create and maintain their own documentation (e.g., CLAUDE.md) to track architectural decisions.

Tool Use & Orchestration: Agents can now call external tools programmatically. They can access the file system, run Bash commands, query databases, and even spawn "sub-agents" to handle parallel tasks. Perhaps one of the most significant innovations is this ability to spawn subagents: A primary agent can delegate distinct tasks, such as spinning up a backend API, to a subagent while simultaneously building the frontend interface.

Sandboxing and Security: A critical barrier to enterprise adoption was the risk of AI hallucinating destructive commands. CC ships with rigorous filesystem and network sandboxing.

Platform Agnosticism: While tools like Devin are browser-based (although CC has a Chrome extension), Claude Code integrates into the local environment, allowing it to become a seamless part of the existing DevOps infrastructure.
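The subagent idea from the Tool Use & Orchestration item can be sketched in a few lines of Python. This is a toy under our own assumptions, not how Claude Code does it internally: `subagent` and `orchestrate` are hypothetical names, and a real subagent would call a model and tools rather than return a string.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict


def subagent(task: str) -> str:
    """Hypothetical subagent: a real one would call a model and tools."""
    return f"done: {task}"


def orchestrate(tasks: Dict[str, str]) -> Dict[str, str]:
    """Primary agent hands each task to its own subagent and collects results."""
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        # Submit everything first so the subagents run side by side.
        futures = {name: pool.submit(subagent, task) for name, task in tasks.items()}
        return {name: fut.result() for name, fut in futures.items()}


results = orchestrate({
    "backend": "spin up the backend API",
    "frontend": "build the frontend interface",
})
print(results)
```

Each subagent carries its own context and its own token bill, which is part of why parallel agents matter for the compute discussion later in this piece.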

Then this week Anthropic released Claude Cowork. We think that’s only the beginning of a radical shift. It paves the way for a new wave of applications, ones that are no longer static code but dynamic interfaces built upon and driven by Claude Code. Interfaces and apps like this will make it a lot easier for the average user to dive in, which should drive adoption.

Ok, enough of the boring technical stuff, let’s dig into the good stuff: the hype. Here are just a few select tweets/quotes regarding Claude Code over the last two weeks:

Olivia Moore (Partner at a16z):

Claude for Chrome is absolutely insane with Opus 4.5

IMO it’s better than a browser - it’s the best agent I’ve tried so far

SHOP CEO Tobi Lutke:

I shipped more code in the last 3 weeks than the decade before. The top AI models / agentic systems right now are an entirely different thing to what people used until the beginning of December.

How much of Claude Cowork did Claude Code write? Let’s ask the creator of Claude Code:

Boris has said he runs 5-10 Claude instances in parallel while coding. His team pushes around five releases per engineer per day.

Jaana Dogan (Principal Engineer at Google):

I’m not joking and this isn’t funny. We have been trying to build distributed agent orchestrators at Google since last year. There are various options, not everyone is aligned... I gave Claude Code a description of the problem, it generated what we built last year in an hour.

Andrew Curran on what’s next:

There will eventually be a 'Claude Code' moment for every sphere of human endeavor, because this generalizes to everything and continues to scale. Anthropic went enterprise strategically, but Anthropic is not a coding company. Anthropic is an intelligence company.

Claude code is probably AGI in hindsight in 18 months.

i have never experienced more flow state in my entire life than the past few weeks

the unbearable opportunity cost of literally doing anything other than making sure your fleet of agents are running 24/7 is a ridiculously motivating force

Claude code is actually as good as all the insane Silicon Valley people on your timeline are saying

Dean Ball on why this is flying under the radar among many:

I agree with all this; it is why I also believe that opus 4.5 in claude code is basically AGI.

Most people barely noticed, but *it is happening.*

It’s just happening, at first, in a conceptually weird way: Anyone can now, with quite high reliability and reasonable assurances of quality, cause bespoke software engineering to occur. This is a strange concept. Most people, going about their day, do not think about how "causing bespoke software engineering to occur" might improve their lives or allow them to achieve some objective. If you have deeply internalized the general-purpose nature of "software," and especially, "things achievable by well-orchestrated computers," you understand that in some important sense, almost all human endeavor can be aided, in some way or another, by software engineering. A great deal of it can be automated altogether.

It will take time to realize this potential, if for no other reason than the fact that for most people, the tool I am describing and the mentality required to wield it well are entirely alien. You have to learn to think a little bit like a software engineer; you have to know "the kinds of things software can do." You have to learn also to think like the chief executive of a thousand small (but fast growing) teams of software engineers who possess expert-level knowledge of virtually all domains of human intellectual life. Grasping all of this, and learning how to embody it, requires humans to adopt a strange and new kind of agenticness. Not all of us will.

Sequoia partner @sonyatweetybird says we’re going from the age of product-led growth to the age of agent-led growth.

“You see this most clearly if you’re using Claude Code actively. It says, ‘Hey, for a database, you should use Supabase. For hosting, use Vercel.’ It’s choosing for you, the stuff you should be using.”

“Product-led growth brought us closer to the vision of ‘best product wins,’ but ultimately people are still lazy. They can’t read all the reviews, and they kind of default to what looks cool on the website.”

“Whereas your agent has infinite time to go and make these choices for you. It can go and read all the documentation, read all the user comments, and figure out [what you need] for your use case.”

Nikhil Krishnan on beginner use cases for Claude Code:

I’ve spent the last 48 hours in Claude Code - as a non-technical person it’s basically unlocked three very big things for me

The ability to interact with APIs generally - again, as a non-technical person one of the big barriers to running the business has been touching APIs. For example, what you can do in Stripe in the non-developer portal vs. through the API is night and day.

The ability to thread things together - another issue has been threading several different products we work with together to do cohesive tasks. Zapier gets you part of the way for triggers, but Claude Code let’s me do way more complex things that touches multiple things simultaneously

Run something regularly - being able to set a script and run it regularly with this level of ease is a game changer. In about an hour I set up a daily email to myself that tells me the top 3 emails I need to respond to based on a priority scoring system we made together that pulls data from a few different places.

Ryan McEntush from a16z on how easy it is to go from idea to deployment:

“i built this entire website in two days with claude code. i could do everything i wanted — idea to deployed app was fast as hell. data i used to pay for can now be pulled/inferred by LLMs w/ web search. transformation & classification, etc. can just be handed off to an LLM. gemini flash is basically free. i didn’t even need to think about a beginner-friendly backend, i just used whatever was cheapest. didn’t matter, claude walked me thru it. and somehow claude understood my exact UX vision even though i barely had the words for it.

in a past revops role, there were so many internal tools that were outrageously expensive for what felt like relatively simple functionality. now i could probably rebuild a better version in a couple hours.

i’m starting to go through all my personal tool subs and write designs for my own version. even all my spreadsheets should have a GUI. it’s hard to see how this doesn’t change everything.”

Damn, it is so much fun to build orchestrators on top of Claude Code.

You would think the terminal would be the ultimate operator.

There is so much more alpha left to build on top of all of this. Include insane setups to have coding agents running all day. If I had agentic coding and particularly opus, I would have saved myself first 6 years of my work compressed into few months.

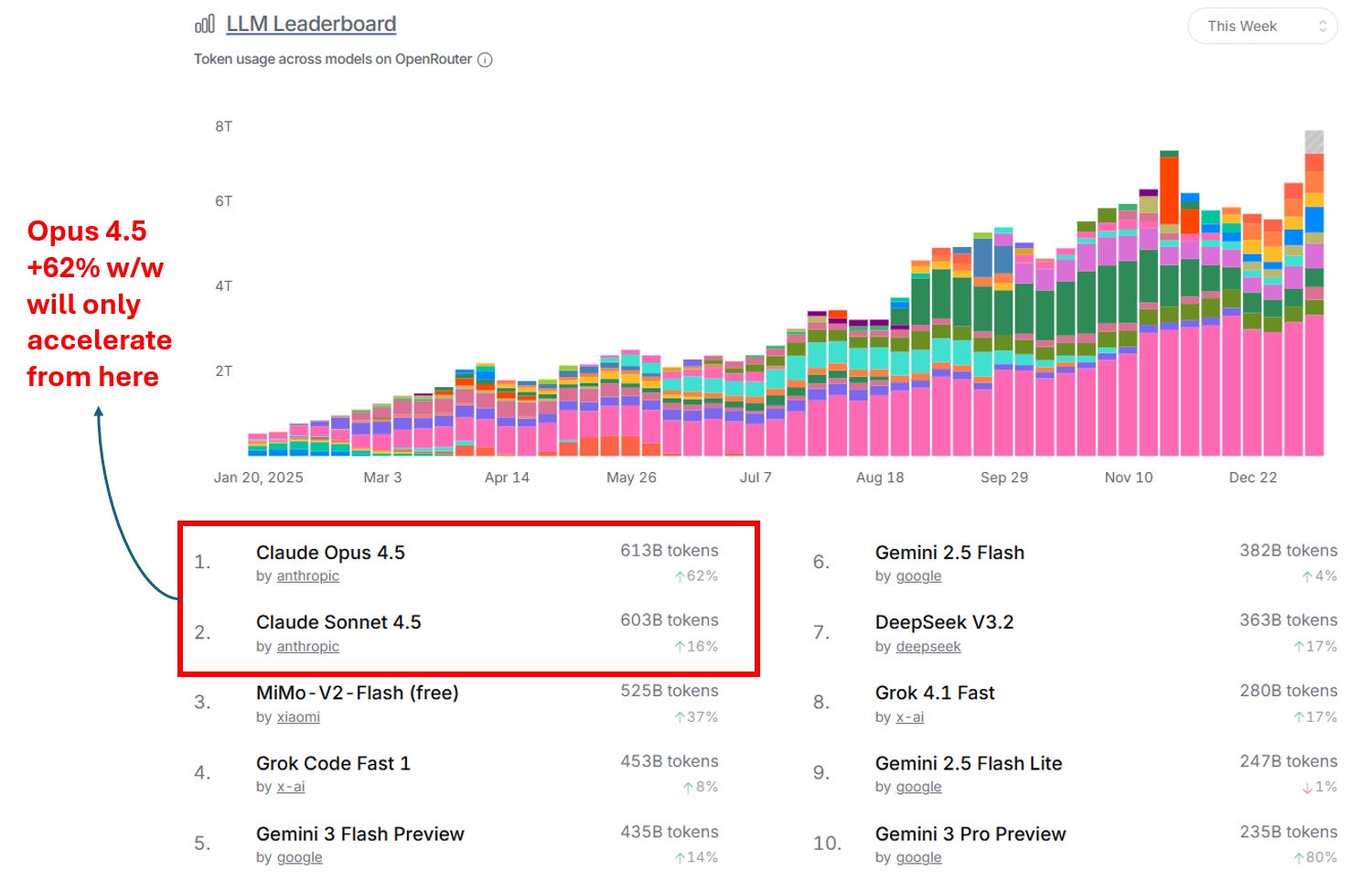

Opus 4.5 has already risen to the top of token usage at OpenRouter:

How about the pushbacks?

While the large majority of feedback I have seen is overwhelmingly positive, some pushback I’ve read mainly centers around its high resource demands, including excessive CPU usage, frequent freezes, and large token consumption that inflate costs and limit scalability for complex projects exceeding a few thousand lines of code.

Here are some snippets from X. From @linkmarine:

I've been coding for 40 years. Claude is great for generating a large amount of code. BUT it definitely does not write the BEST code. Wrong file format selection that doesn't work for steaming. Wrong use of API to limit memory USE. Wrong loss function for a model task. Completely wrong learning rate recommendation for a task. I have a long long list of stuff Claude has done wrong (sometimes exactly the opposite of what should be done). Only senior engineers would know. But Claude can still generate huge amounts of code and is insanely fast to iterate

Long thread here on drawbacks as well.

We aren’t naive. We know real-world integration of agents will be messy. While agents excel in isolation, they often stumble when navigating the undocumented "tribal knowledge" of legacy enterprise codebases. And I’m sure there will be many more stumbling blocks and news of “Agents gone wild” over the next year, including cybersecurity issues which Anthropic has already highlighted.

Still, I think we all know after 3 years of AI progress that these coding agents will only get better and better, especially as Anthropic has lit a competitive fire under other labs like OAI and xAI.

So how much compute does Claude Code use? TL;DR: A lot!

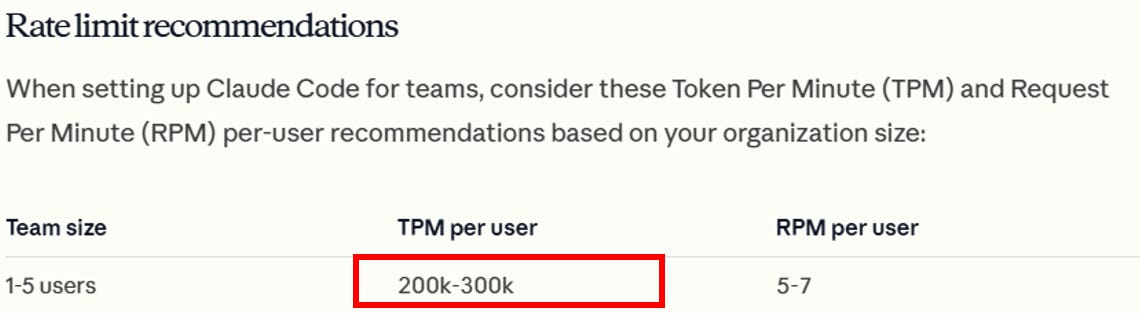

Anthropic’s own guidelines recommend a limit of 200-300k tokens per minute for a team of 1-5 users. Yes, per minute.

And this is what Anthropic recommends for Sonnet 4.5, which is a much lighter model than Opus 4.5.

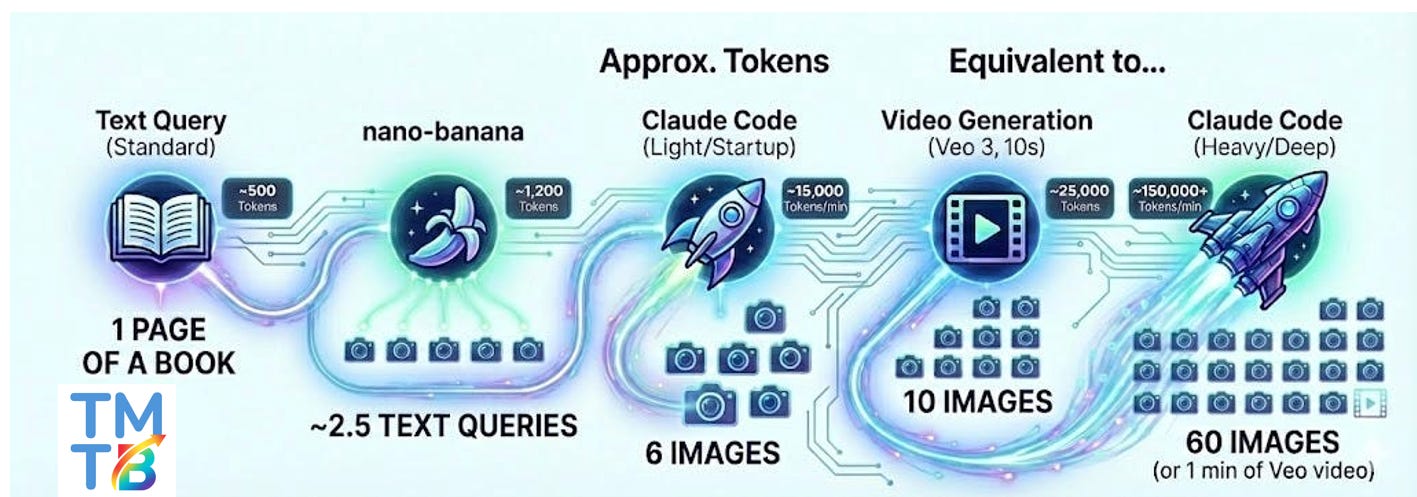

While not all users will use that many, there is a compounding cost to Claude Code in terms of token usage:

Minute 1 (Startup): You open a small project. Context is small (3 files). The agent thinks once. Cost: ~15k tokens.

Minute 10 (Deep Work): You have 20 files open. The agent has a long history of “trying” things (errors, logs, diffs). Now, every single time it tries to fix a bug, it has to re-read that massive history. Cost: ~200k tokens per step.
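To see how quickly that adds up, here is a back-of-the-envelope Python sketch. The two endpoints (~15k tokens for the first step, ~200k by the tenth) come from the example above; the straight-line growth in between, the one-step-per-minute pacing, and the `step_costs` helper are our own simplifying assumptions, not measured data.

```python
from typing import List


def step_costs(first_cost: int, last_cost: int, steps: int) -> List[int]:
    """Per-step token cost, assuming context grows linearly between the endpoints."""
    growth = (last_cost - first_cost) / (steps - 1)
    return [round(first_cost + i * growth) for i in range(steps)]


# Endpoints from the example above; everything in between is an assumption.
costs = step_costs(first_cost=15_000, last_cost=200_000, steps=10)
total = sum(costs)          # tokens burned across the whole session
flat_total = 15_000 * 10    # what it would cost if history were never re-read

print(costs)
print(total, flat_total, round(total / flat_total, 1))
```

Under these assumptions the ten-step session burns on the order of a million tokens, several times what the same ten steps would cost if each were as cheap as the first. The driver is that the agent re-reads its growing history on every step.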

If Claude Code is the catalyst for agentic adoption, it’s not hard to see why this will be a massive boon to compute. So who wins?
