TMTB Weekly: AI Velocity Reset, NVDA CPX Winners/Losers, RDDT, MSFT, META, MDB, WDAY, INTU

Happy Sunday. Quite a week, with the QQQs up close to 2%, led by the AI trade as the GS AI basket was +5%. It's no surprise why. The week started off with NBIS +50% on Monday after inking a $17.4B (up to $19.4B) five-year AI infrastructure capacity deal with MSFT, plus a follow-on $3B raise to fund the buildout. On Tuesday, ORCL posted a ridiculous RPO number of $455B (+359% y/y) that had everyone triple-checking the PR to make sure it wasn't a typo, sending the stock up 35%+, a move that reminded many of NVDA's infamous 2023 post-ChatGPT print.

If those two datapoints weren't enough to convince you the HOT AI summer is transitioning into a HOT AI Fall, we also had bullish GS presentations talking up AI demand, broadening usage, and revenue-generating opportunities. We thought the CRWV presentation was worth a read in full, with the key quote:

"That problem is continuing to persist in, is honestly worsening. I would say what we've observed over the past four to six weeks is yet another inflection in demand"

Memory names continued to squeeze throughout the week on better hyperscaler demand and ORCL results as DRAM server pricing continues to ramp:

MU is now up 40% in the month of September alone, catching many of the shorts playing for HBM oversupply in '26 off guard. Local Asian press reported that MU is planning to raise prices by 20-30% and is suspending price quotations across DDR4, DDR5, LPDDR4, and LPDDR5 products.

That's playing second fiddle to SNDK, which is up 70% in the month of September. Its CEO was at GS saying they "see an undersupplied market all the way through '26" and are raising prices by 10% for part of the market.

Not to be outdone, NVDA announced its new Rubin CPX chip, which splits prefill and decode onto GPUs optimized for each workload. That split can speed things up 6x overall, which will help drive down token costs and hence drive more usage. SemiAnalysis laid out the implications:

"This is a game changer for inference. As a result, the rack system design gap between Nvidia and its competitors has become canyon-sized…Nvidia has just made another Giant Leap, again leaving competitors very distant objects in the rear-view mirror."

According to the Asian press, NVDA has already asked Samsung to double GDDR7 supply.

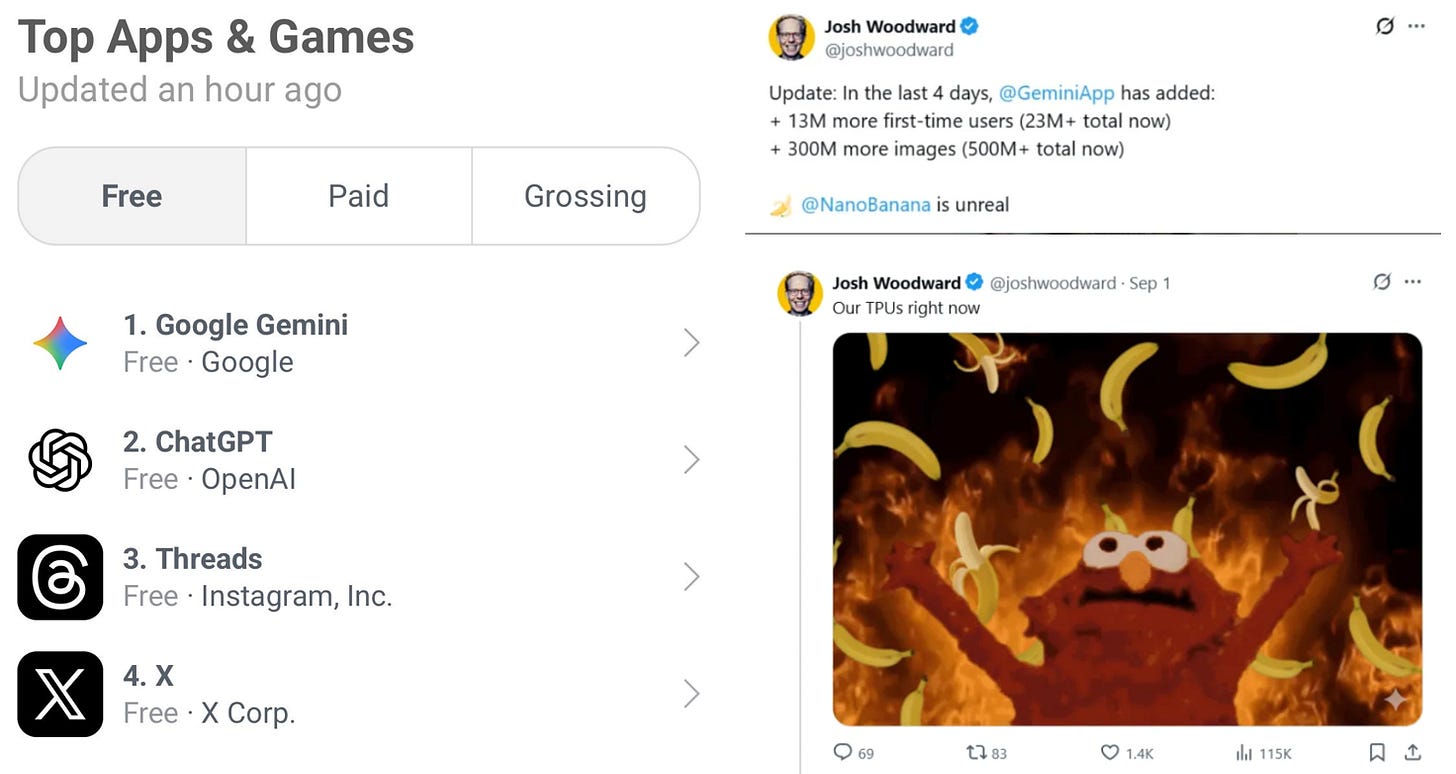

Nanobanana continued to drive people to Gemini, which hit the top of the app charts for the first time over the weekend.

Yes, quite a week! Investors have spent more than two years training themselves to expect faster cycles in AI, and this week still blew through "fast": multi-billion-dollar capacity contracts, half-trillion-dollar backlogs, viral hype cycles (nanobanana), and memory pricing inflections all stacked into the same week. Arcs that would normally take a quarter (or a decade) are showing up in a single week, as better capabilities drive ROI improvements, which in turn accelerate adoption. In other words: the "dizzying pace" of the last two years wasn't the peak; it was likely just the on-ramp. And we don't even have models trained on Blackwell clusters yet.

It was hard to come away from this week not wanting to own more AI anything (GPU/XPU arms dealers, DCs, storage, power, AI networking, etc.), and we think most investors felt the same. What's more exciting is that we're also seeing anemic price action in AI losers, which makes for a great stock-picking environment. It's really hard to imagine a more "stacked" week than this, but we think this week was just a preview of what's to come in late 2025/2026. Strap in.

MACRO

Macro takes a back seat to the AI supercycle, but only barely: our view is that the Fed almost always takes precedence, and we are likely to see the first of three rate cuts by year-end this week. Our top-down view remains the same: