SNDK/Memory: The Perfect Storm: Jensen, Engram, and the "After Claude Code" era.

Portions of this article have been sent to TMTB Pro subscribers over the last 3 weeks. For more information on TMTB Pro, please e-mail sales@tmtbreakout.com

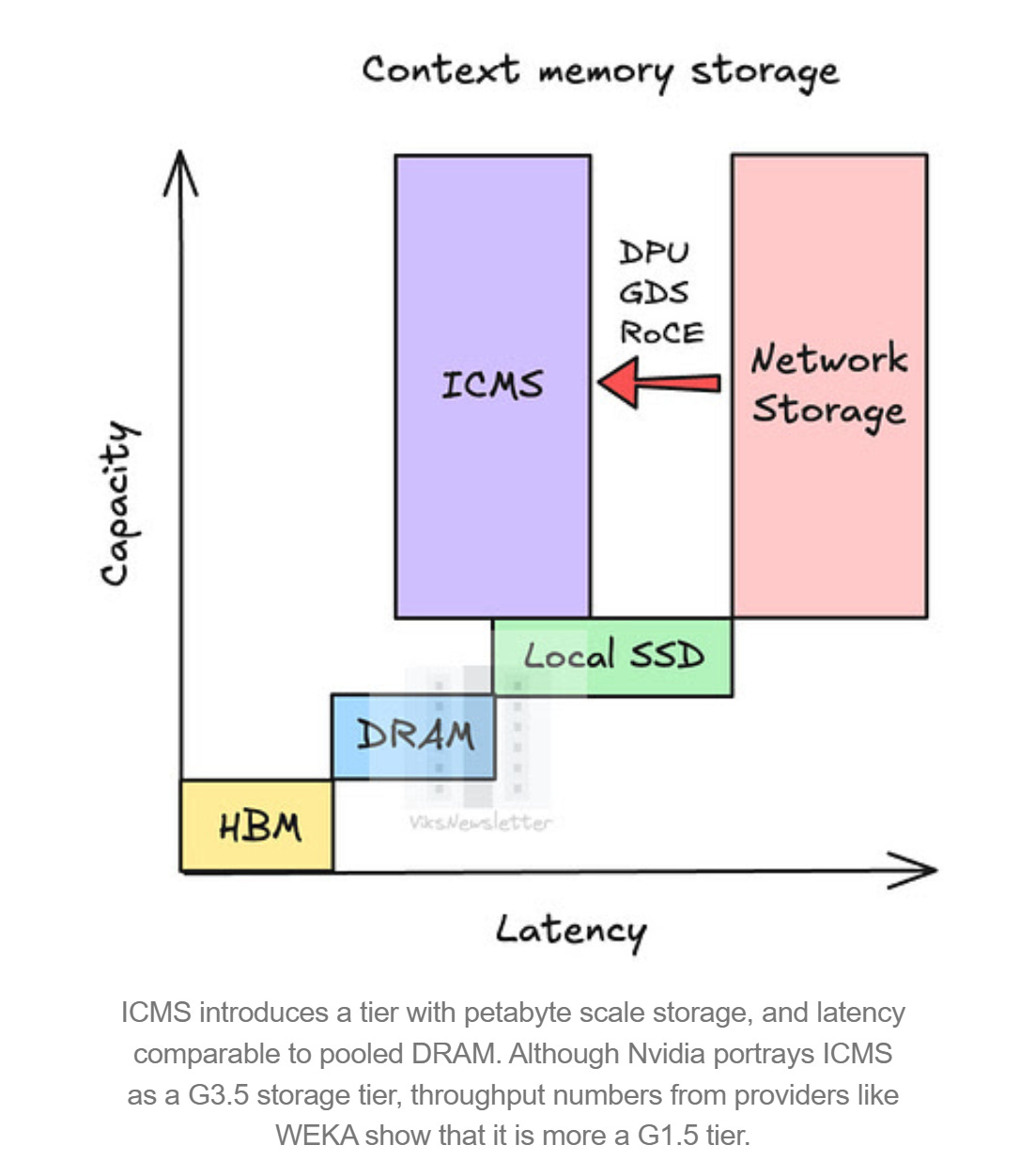

SNDK is up 100%+ in 3 weeks. Memory stocks have ripped. What changed in that time? Well, YTD we arguably got 3 new game-changing demand signals for NAND (and DRAM): Jensen’s ICMS storage announcements at CES, Claude Code ramping, and DeepSeek’s Engram storage architecture. That makes for arguably one of the best structural fundamental “rates of change” that I’ve seen in any industry in such a short amount of time.

Let’s dig into all 3. First, Jensen started it all at CES, announcing ICMSP:

“The amount of context memory, the amount of token memory that we process, KV cache we process, is now just way too high. You’re not going to keep up with the old storage system…Context is the new bottleneck — storage must be rearchitected.”

“You want to put it right into the computing fabric, which is the reason why we introduced this new tier. This is a market that never existed, and this market will likely be the largest storage market in the world, basically holding the working memory of the world’s AIs”

“That storage is going to be gigantic, and it needs to be super high performance. And so I’m so happy that the amount of inference that people do has now eclipsed the computing capability that the world’s infrastructure has”

Remember, offloading KV cache isn’t just about architecture: HBM3e costs orders of magnitude more per GB than NAND and is severely supply-constrained by CoWoS packaging capacity. By moving that context to an ICMSP rack, you free up the HBM for active compute, effectively increasing the throughput of your GPUs without buying more GPUs.

Jensen addressed this by unveiling the DGX “Vera Rubin” NVL72 superPOD.

The pictured layout is 4 racks of Spectrum-X (left) for scale-out networking, 8 racks of VR200 NVL72 compute (middle), and 2 racks of a new “inference context memory” storage platform (right). The key incremental here is the two storage racks: they’re essentially standalone storage to expand context windows via KV-cache offload, and should be additive for the NAND market (and NAND suppliers). In our conversations with investors at CES, IR expressed that the NAND market was already ~8% undersupplied heading into CES, and this configuration is incremental versus prior forecasts—helping explain the stock’s massive rip on Tuesday.

Here’s the math / configuration behind the incremental NAND:

Jensen said that within each VR200 NVL72 rack, each GPU has access to ~1TB of NAND in the standard configuration, and there appear to be enough slots to scale that to roughly 3–4TB/GPU within the NVL72 rack.

But the truly incremental piece is the dedicated storage racks: 16 storage trays per rack, each tray has 4 BlueField DPUs (handling storage access, encryption, and traffic steering so CPU/GPU focus on higher-value work), and each DPU manages ~150TB of NAND.

That implies 16 × 4 × 150TB = ~9.6PB (9.6k TB) NAND per superPOD, or ~9.6PB / 8 compute racks = ~1.2PB incremental NAND per NVL72 rack. If you assume ~100k VR200 racks ship in 2027 in the superPOD format, that’s ~120EB of incremental NAND demand vs. a total NAND market that looks like ~1.1–1.2ZB (1,100–1,200EB) per year, i.e., roughly ~10% of industry demand. Now that might be a little high given ICMS will largely be used for inference rather than training, but it is still at least a mid-single-digit (MSD) % of demand for new racks. This incremental demand helps offset any downdraft we might get on the consumer side of the business. Even if you assume a very draconian 15% decline in consumer demand (thought to be ~60% of the mkt in 2025), which works out to ~9% of the total market, this makes up for it, and that’s without taking into account that the market is already close to 10% under-supplied.
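The arithmetic above can be reproduced with a short back-of-envelope sketch. Every input is the article's estimate rather than a vendor specification, and the function names are hypothetical. One assumption to flag loudly: the ~9.6PB figure counts 16 trays once, so the `storage_racks_counted` parameter defaults to 1 to match it, even though the pictured layout shows two storage racks.

```python
# Back-of-envelope version of the article's NAND math.
# All inputs are the article's estimates, not vendor specifications.

TB_PER_PB = 1_000
PB_PER_EB = 1_000


def pod_storage_pb(trays=16, dpus_per_tray=4, tb_per_dpu=150,
                   storage_racks_counted=1):
    """NAND in the dedicated storage tier of one superPOD, in PB.

    storage_racks_counted=1 mirrors the article's ~9.6PB figure (assumption).
    """
    total_tb = trays * dpus_per_tray * tb_per_dpu * storage_racks_counted
    return total_tb / TB_PER_PB


def incremental_nand_eb(racks_shipped=100_000, compute_racks_per_pod=8,
                        **pod_kwargs):
    """Incremental NAND demand in EB for a given number of compute racks."""
    per_rack_pb = pod_storage_pb(**pod_kwargs) / compute_racks_per_pod
    return racks_shipped * per_rack_pb / PB_PER_EB


def share_of_market(incremental_eb, market_eb):
    """Incremental demand as a fraction of annual industry NAND demand."""
    return incremental_eb / market_eb


def consumer_drag(consumer_share=0.60, decline=0.15):
    """Fraction of total demand lost if consumer demand falls by `decline`."""
    return consumer_share * decline


if __name__ == "__main__":
    eb = incremental_nand_eb()
    print(f"storage per superPOD: {pod_storage_pb():.1f} PB")
    print(f"incremental demand:   {eb:.0f} EB")
    for market_eb in (1_100, 1_200):
        share = share_of_market(eb, market_eb)
        print(f"share of a {market_eb} EB market: {share:.1%}")
    print(f"consumer drag at -15%: {consumer_drag():.1%} of total demand")
```

Passing `storage_racks_counted=2` doubles the per-pod figure, which is a quick way to see how sensitive the conclusion is to how the trays are counted.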

In addition, from our understanding, ICMSP racks are backwards compatible. Even if a customer is still using Blackwell (GB200), they can ostensibly buy these ICMSP storage racks early as an add-on to boost their context window, which means the SNDK ramp might actually precede the full Vera Rubin compute ramp. ICMSP connects via NVDA Spectrum-X Ethernet, not the internal NVLink spine that connects GPUs to CPUs, meaning you can plug a “Rubin-class” storage rack into Blackwell or even Hopper, assuming you have the spare Ethernet ports.

(For a deeper dive on KV cache offloading, check out Vik’s newsletter post this weekend.)

Second, last week we got even more evidence of this paradigm shift as DeepSeek’s “Engram” architecture underscored the growing importance of offloading memory from GPU/HBM to cheaper tiers (both DRAM and NAND), similar to NVDA’s BlueField framework. We liked this article from Pan Xinghan on the Engram architecture, which lays out why this is so positive for memory:

Unlike MoE... Engram lookups are deterministic. The system knows exactly which memory slots are needed based solely on the input token sequence...[TMTB: this is important for SNDK as SSD latency is slower than DRAM]. Engram proves that scaling is no longer just about stacking layers or adding experts. It demonstrates that Conditional Memory is an indispensable modeling primitive for next-generation sparse models. By decoupling memory from compute, China is building LLMs that are not only smarter but structurally more efficient.

The bolded part above has big implications for NAND if it scales to models with large parameter counts, which the DeepSeek paper says it should. Let’s let Gemini dumb it down for us a bit:

The breakthrough in Engram is Deterministic Lookup. In standard LLMs (like MoE or dense transformers), the model doesn’t know what information it needs until it calculates it layer-by-layer (dynamic routing). This requires ultra-fast memory (HBM) because the data must be ready instantly. DeepSeek’s Engram is different: it identifies the necessary N-grams (static patterns) based solely on the input text before the deep computation starts. Because the system knows “indices ahead of time,” it can prefetch data. This prefetching capability masks the latency difference between fast HBM and slower Host Memory. While the paper explicitly validates offloading to DDR (System RAM), the architecture natively supports a “Tiered Memory” hierarchy and opens the door for NVMe SSDs (NAND) to handle the “Long Tail” of static knowledge—massive libraries of facts that are rarely used but necessary to have on hand…DeepSeek’s paper demonstrates offloading a 100B-parameter embedding table (a massive amount of data) entirely to host memory with less than 3% performance loss. The “U-Shaped Scaling Law” they discovered suggests that as models get bigger, a significant chunk (20–25%) of the parameters should be this type of static, offloadable memory….Crucially, its “deterministic prefetching” mechanism solves the latency bottleneck for flash storage, effectively turning SanDisk’s Enterprise SSDs into “slow RAM” and establishing them as the only viable infrastructure for scaling the massive, multi-terabyte context windows required by future agentic AI.

That last line is massive because NAND is still priced at a very small fraction of what DDR is. Since this architecture allows it to turn into “slow RAM,” its strategic value in the datacenter rises significantly.
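The prefetching idea in the Gemini summary can be illustrated with a toy sketch. This is not DeepSeek's implementation: the hash function, table size, and every name below are hypothetical, and a Python dict stands in for the slow tier (host DRAM or an SSD). The only point it shows is that when slot indices depend solely on the input tokens, the slow reads can be issued before compute starts, so the compute loop never stalls on them.

```python
import zlib

NUM_SLOTS = 1024  # hypothetical table size, chosen only for the demo


def ngram_slots(tokens, n=2, num_slots=NUM_SLOTS):
    """Map every n-gram in the input to a table slot with a stable hash.

    The slots depend only on the input tokens, so they are known before
    any model layers run.
    """
    slots = []
    for i in range(len(tokens) - n + 1):
        key = " ".join(tokens[i:i + n]).encode("utf-8")
        slots.append(zlib.crc32(key) % num_slots)
    return slots


class TieredTable:
    """Toy two-tier store: a fast cache in front of a slow tier."""

    def __init__(self, slow_tier):
        self.slow_tier = slow_tier  # stands in for host DRAM or an SSD
        self.fast_cache = {}        # stands in for GPU-side memory
        self.stalls = 0             # lookups that had to wait on the slow tier

    def prefetch(self, slots):
        """Pull the needed slots into the fast cache ahead of compute."""
        for slot in set(slots):
            if slot not in self.fast_cache:
                self.fast_cache[slot] = self.slow_tier[slot]

    def lookup(self, slot):
        """Read a slot during compute; a cache miss counts as a stall."""
        if slot not in self.fast_cache:
            self.stalls += 1
            self.fast_cache[slot] = self.slow_tier[slot]
        return self.fast_cache[slot]


def run(tokens, use_prefetch):
    """Return how many lookups stalled on the slow tier during compute."""
    slow_tier = {s: f"embedding-{s}" for s in range(NUM_SLOTS)}
    table = TieredTable(slow_tier)
    slots = ngram_slots(tokens)  # known from the input alone
    if use_prefetch:
        table.prefetch(slots)    # slow reads happen before compute
    for slot in slots:           # the "compute" phase
        table.lookup(slot)
    return table.stalls


if __name__ == "__main__":
    tokens = "fix the bug then run the test".split()
    print("stalls with prefetch:   ", run(tokens, use_prefetch=True))
    print("stalls without prefetch:", run(tokens, use_prefetch=False))
```

In a real system the prefetch would overlap with earlier computation rather than run ahead of it in sequence, and whether SSD latency can be fully hidden depends on bandwidth and how far ahead the indices are known; the sketch leaves both out.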

Unlike V3’s focus on doing more with less, Engram focuses on doing more with massive external memory. While DeepSeek V3 was a big negative catalyst for the AI trade last year, ironically, we think DeepSeek V4 (likely released before the Lunar New Year) will showcase the Engram architecture and act as a positive catalyst for the memory trade.

This has a doubly positive effect as it also helps defuse the “China Capacity” bear case. Because the Engram architecture allows labs to substitute restricted HBM with commodity memory to bypass sanctions, it wouldn’t surprise us to see aggressive adoption by Chinese hyperscalers. This creates a massive internal sink for domestic DRAM and NAND output (CXMT/YMTC), absorbing the very supply that bears feared would flood the global market.

Third, let’s look at why this confluence is happening right now. Two words: Claude Code. We are at a transition from ‘State-less Chatbots’ (which forget everything) to the ‘After Claude Code’ (‘ACCel’) era of ‘State-full Agents’ (which maintain a persistent workspace).

While English text is somewhat repetitive, code is extensively repetitive. Think about what Claude Code does: it tries to fix a bug, fails, reads the error, tries again, edits a file, runs a test. This requires “Long-Term Working Memory.” BlueField/Engram allow this “Working Memory” to live on the DPU/NAND layer. This means an Agent can have a “Session State” that lasts for days without consuming expensive GPU HBM.

Even with SNDK up +100% YTD, we think the market isn’t fully grasping the implications of the announcements from the first three weeks of the year, which have largely gone under the radar among the generalist community, and even among some specialists. We really don’t hear of many HFs owning this stock. While consensus views this as a standard cyclical memory trade, we see a massive architectural shift. The demand signals from BlueField, Engram, and Claude Code/AI Agents represent a step-function change, not just a continuation. SNDK now has the trifecta of cyclical + secular + structural tailwinds.

The memory narrative was already the cleanest in Tech (even more so after INTC’s underwhelming print). The scarcity value of a clean narrative has already driven flows to the memory trade, and we think these changes continue to drive $ to these names. In this context, Mark Liu’s insider buy at MU on Friday, with MU at all-time highs, makes perfect sense: he gets the magnitude of this architectural shift.

Bulls here are now talking about $75+ in EPS in CY27 (if you flow through a 100% q/q pricing increase, you already get a $15-20 quarterly run-rate). Uber bulls I talk to have thrown around a $90-100 number. We’re unsure of where SNDK ends up, but like we said in the beginning: we think these changes mark one of the best structural fundamental “rates of change” that I’ve seen in any industry in such a short amount of time. Still, memory is a commodity business and SNDK is a high-vol stock: market narratives and price action are unpredictable, so we like using a tight stop with ST MAs on a trade like this. That’s how we’re trading this one; to each their own.

Look at Kioxia: the fab split btw KIOXIA-SNDK is 60-40%. They make similar products, and they were supposed to merge when WDC owned SNDK.

Theoretically, KIOXIA should have a mkt cap 50% larger than SNDK given they should have 50% more output based on the fab/capex agreement, yet it trades at a 40% discount.

The backwards compatibility point is what caught my attention. If ICMSP racks work with existing Blackwell/Hopper setups via Spectrum-X Ethernet, the NAND ramp could actually lead the Vera Rubin cycle rather than follow it. That's a subtle but really important distinction for timing the trade. Also interesting how Engram's deterministic prefetch essentially neutralizes the latency penalty that kept NAND out of this tier before.